Prior Swapping

Efficient algorithms for incorporating prior information, post-inference.

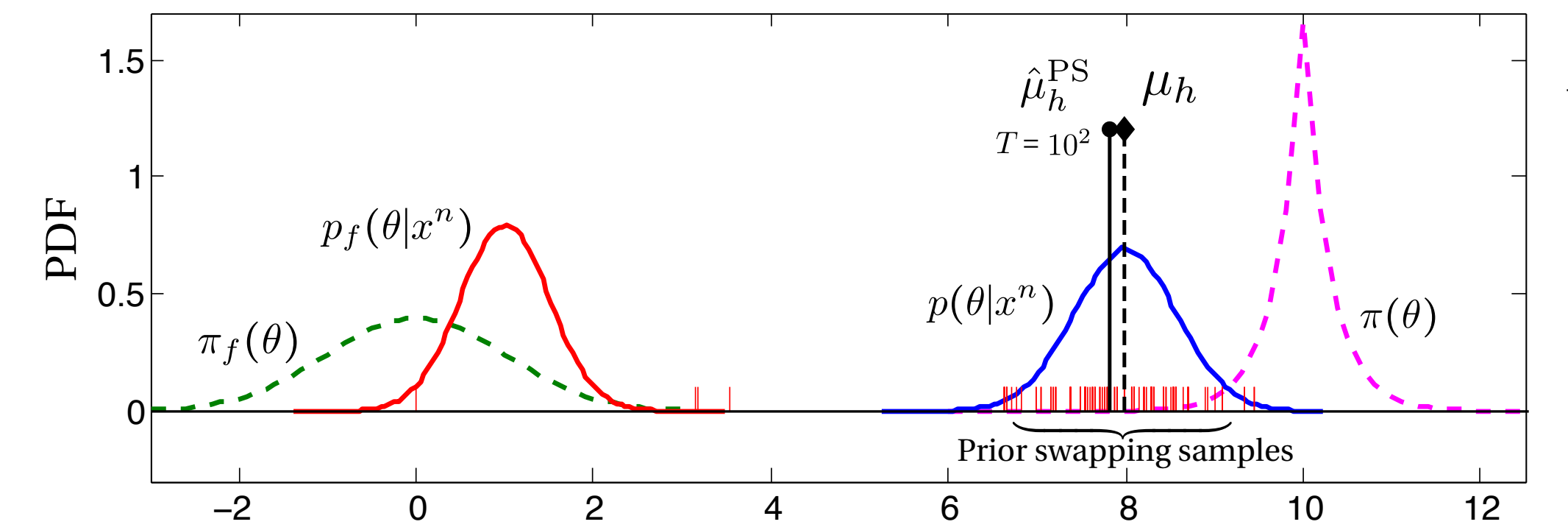

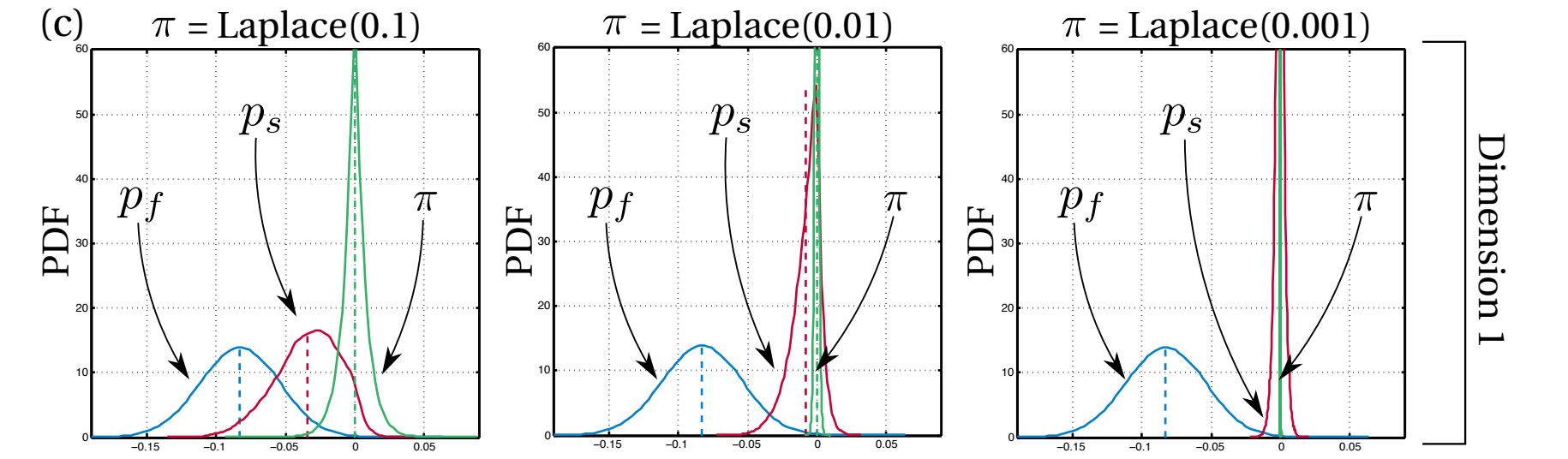

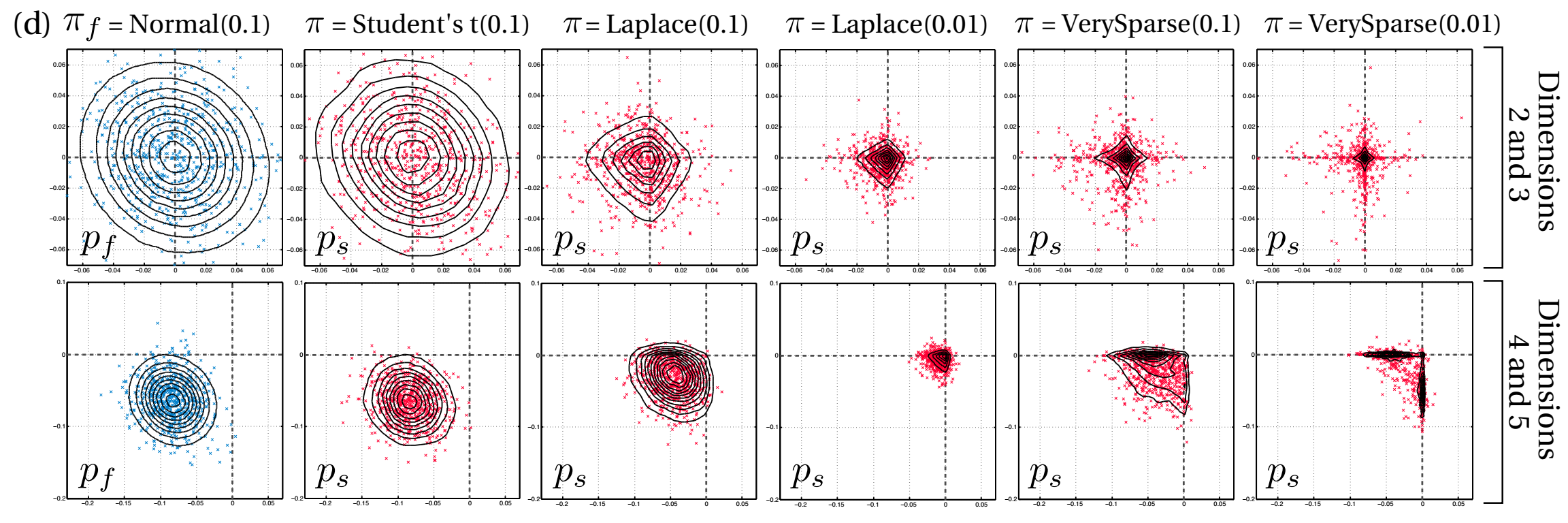

While Bayesian methods are praised for their ability to incorporate useful prior knowledge, in practice, convenient priors that allow for computationally cheap or tractable inference are commonly used. In this paper, we investigate the following question: for a given model, is it possible to compute an inference result with any convenient false prior, and afterwards, given any target prior of interest, quickly transform this result into the target posterior?

A potential solution is to use importance sampling (IS). However, we demonstrate that IS will fail for many choices of the target prior, depending on its parametric form and similarity to the false prior. Instead, we propose prior swapping, a method that leverages the pre-inferred false posterior to efficiently generate accurate posterior samples under arbitrary target priors. Prior swapping lets us apply less-costly inference algorithms to certain models, and incorporate new or updated prior information "post-inference". We give theoretical guarantees about our method, and demonstrate it empirically on a number of models and priors.
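To make the importance sampling baseline concrete, here is a minimal sketch. It is not the prior swapping method from the paper, and it is not taken from the released code. Because the false posterior is proportional to the false prior times the likelihood, reweighting false-posterior samples toward the target posterior only needs the ratio of the target prior to the false prior. The toy model (a Gaussian mean with known noise and a wide conjugate Gaussian false prior), the function names, and all parameter values are assumptions chosen for illustration. The effective sample size (ESS) of the normalized weights shows how the reweighting degrades as the target prior moves away from the false prior.

```python
import numpy as np


def log_normal_pdf(theta, mean, std):
    """Log density of N(mean, std^2) evaluated at theta."""
    return -0.5 * np.log(2 * np.pi * std**2) - 0.5 * ((theta - mean) / std) ** 2


def false_posterior_samples(x, noise_std, prior_std, n_samples, rng):
    """Exact samples from the posterior under the false prior N(0, prior_std^2).

    Assumes the toy likelihood x_i ~ N(theta, noise_std^2), so the
    posterior is Gaussian and available in closed form.
    """
    precision = len(x) / noise_std**2 + 1.0 / prior_std**2
    mean = (np.sum(x) / noise_std**2) / precision
    return rng.normal(mean, np.sqrt(1.0 / precision), size=n_samples)


def is_weights(theta, log_target_prior, log_false_prior):
    """Normalized IS weights, proportional to target_prior / false_prior."""
    log_w = log_target_prior(theta) - log_false_prior(theta)
    log_w = log_w - np.max(log_w)  # stabilize before exponentiating
    w = np.exp(log_w)
    return w / np.sum(w)


def effective_sample_size(w):
    """ESS of normalized weights; equals len(w) when all weights are equal."""
    return 1.0 / np.sum(w**2)


def compare_ess(n_samples=5000, seed=0):
    """ESS for three hypothetical target priors of decreasing similarity."""
    rng = np.random.default_rng(seed)
    x = rng.normal(1.0, 1.0, size=50)  # synthetic data, illustration only
    theta = false_posterior_samples(x, 1.0, 10.0, n_samples, rng)

    def log_false(t):
        return log_normal_pdf(t, 0.0, 10.0)

    targets = {
        "same": log_false,                               # identical to false prior
        "near": lambda t: log_normal_pdf(t, 1.0, 1.0),   # overlaps the posterior
        "far": lambda t: log_normal_pdf(t, 5.0, 0.1),    # mass far from the samples
    }
    return {
        name: effective_sample_size(is_weights(theta, log_target, log_false))
        for name, log_target in targets.items()
    }


if __name__ == "__main__":
    for name, ess in compare_ess().items():
        print(f"{name}: ESS = {ess:.1f}")
```

In this sketch the "far" target prior concentrates its mass where the false posterior has almost no samples, so a handful of samples carry nearly all the weight and the ESS collapses. This is the kind of failure the paragraph above attributes to IS.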

Read the Paper

Post-Inference Prior Swapping [pdf].

Willie Neiswanger, Eric Xing.

Accepted at ICML 2017.

Get the Code

The source code can be downloaded here [git; zip]. Please see the README for installation instructions.